Is your mobile app ‘listening’?

You’ve probably used voice technology at least once today. From ‘Hey Siri, set an alarm’ to ‘Alexa, play my song’, voice commands have become as ordinary as breakfast. Twenty percent of all Google app searches are made by voice.

And that’s not all. There’s also Nina, Silvia, Dragon, Jibo, Vokul, Samsung Bixby, Alice, and Braina! According to TechCrunch, “smart devices like the Amazon Echo, Google Home and Sonos One will be installed in a majority – that is, 55 percent – of U.S. households by the year 2022”. In 2021 alone, the number of voice tech users in the United States grew by 128.9%.

You must have noticed, however, that not all mobile apps offer voice navigation, or at least not effectively enough. Seeing what a crowd-pleaser voice technology is, mobile app developers truly need to step up their game and develop better voice interfaces.

This blog post discusses the popularity of voice tech in mobile apps, what makes it tick, and how to strengthen the voice ecosystem to make mobile apps more conversational.

For the better part of the twentieth century, we were awed enough that we could talk to a friend continents apart using a phone. But now, we can actually talk to the phone. So you can ask Siri to call your mom, Cortana to order a pizza, or Alexa to dim the lights and play you a song.

“40% of adults now use voice search once per day,” according to CampaignLive.

The human species first learned to communicate through speech, and text came later. In the case of technology, however, things happened the other way round. We have been operating our computers and phones through text for a long time, and only recently has voice become a sizeable medium for human-computer interaction. With the rise of voice assistants like Siri, Cortana, and Alexa, and smart speakers like Amazon Echo, voice search and voice commands have made their way into our daily lives. It’s no wonder then that Amazon sold 4.4 million Echo units in its first full year of sales, according to GeekWire.

And why not? Voice tech has incredible advantages to offer in mobile app development. In addition to a better user experience and faster search results, voice search lets users multitask and helps businesses build strong customer relationships.

So why do mobile apps need voice navigation?

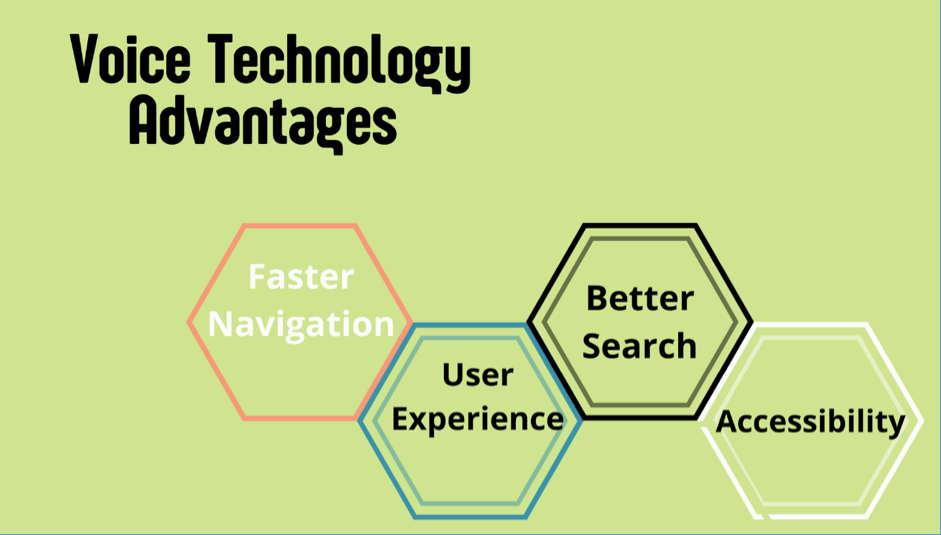

Let’s take a look at the biggest advantages of voice technology for mobile apps:

Faster navigation

It’s simply easier to ask for something than to type it out. A study by Stanford researchers found that people can speak up to three times faster than they can type on a mobile device. The mere act of pulling out your phone, unlocking it, opening the right app and then typing out every single letter can seem like an awful lot of work for today’s savvy customers, who are being pampered by the magic-like powers of ‘OK Google’ and ‘Hey Siri’. Voice navigation helps mobile apps pamper their users the same way, getting things done faster and providing delightful user experiences.

Multitasking

Forgot the next step in the recipe with your hands deep in flour and eggs? Imagine typing a query right now! Voice technology lets users multitask efficiently by letting them use their mobile apps while handling other tasks, be it commuting, exercising, or of course, baking shortbread cookies.

Element of delight

Since we’ve been typing in commands for over two decades now, voice commands carry an element of delight for users. Just saying what you want and having the mobile app do it for you makes users happy, which in turn can increase engagement and stickiness.

Better search

Voice-controlled mobile apps can offer a much better search experience. Instead of making users enter their search criteria and then pick categories, voice search can be designed to return more precise results from a single spoken request. When designing voice commands, you can define synonyms so that users get the desired results whichever word they use.
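As a rough illustration of the synonym idea, here is a minimal Python sketch. The synonym table and the `normalize_query` function are made up for this post and are not part of any voice SDK; a real app would apply something similar to the transcript returned by its speech recognizer.

```python
import re

# Hypothetical synonym table: each spoken variant maps to one canonical search term.
SYNONYMS = {
    "tee": "t-shirt",
    "tees": "t-shirt",
    "tshirt": "t-shirt",
    "t-shirts": "t-shirt",
    "sneakers": "shoes",
    "trainers": "shoes",
}


def normalize_query(transcript):
    """Lowercase the transcript, split it into words, and swap in canonical terms."""
    words = re.findall(r"[a-z0-9-]+", transcript.lower())
    return [SYNONYMS.get(word, word) for word in words]


print(normalize_query("Show me some Tees and trainers"))
# prints ['show', 'me', 'some', 't-shirt', 'and', 'shoes']
```

Whether the user says "tees" or "t-shirts", the search layer now sees the same term.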

Increased inclusivity and accessibility

Voice-controlled mobile apps are more accessible to everyone, including people with disabilities. People with limited vision or motor abilities may find voice commands easier than typing. Supporting voice in multiple languages also makes your mobile app more inclusive for speakers of different languages, for whom typing in their own language can be a tedious process.

To sum up, adding voice navigation and voice search to your mobile app can improve the user experience and increase engagement.

Artificial intelligence and natural language processing have become highly sophisticated, and voice technology is now hugely popular on Google and Apple platforms. Yet the dilemma remains that very few mobile apps offer adequate voice-controlled features. OK Google and Hey Siri don’t work inside individual apps, and most of these apps have limited or no voice interfaces of their own. Those that do aren’t on par with the voice experience users are accustomed to on Google and Apple.

This needs to change. Understanding the challenges in developing efficient voice integrated mobile apps can help us build solutions that last.

Some of the challenges to voice tech are discussed here.

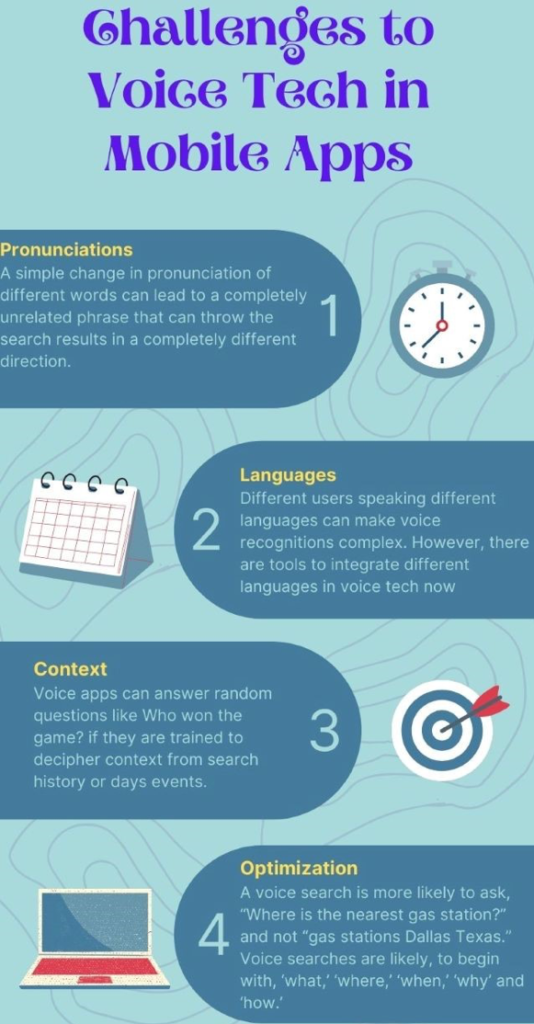

1. Pronunciations

A small change in how a word is pronounced can be recognized as a completely unrelated phrase, which throws the search results in a completely different direction.
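One common way to soften this problem is to match the recognized text against the commands your app knows, and accept the closest one when it is similar enough. The sketch below uses `difflib.get_close_matches` from Python’s standard library; the command list and the 0.6 cutoff are assumptions chosen for illustration, and matching on spelling is only a stand-in for proper phonetic matching.

```python
import difflib

# Hypothetical list of commands the app understands.
KNOWN_COMMANDS = ["play music", "set alarm", "call mom", "dim lights"]


def match_command(transcript, cutoff=0.6):
    """Return the closest known command, or None if nothing is similar enough."""
    matches = difflib.get_close_matches(
        transcript.lower(), KNOWN_COMMANDS, n=1, cutoff=cutoff
    )
    return matches[0] if matches else None


# A slightly misheard transcript is compared against every known command.
print(match_command("set a lamb"))
print(match_command("zzzz"))  # shares no characters with any command, so None
```

When the function returns `None`, the app can ask the user to repeat the request instead of guessing.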

2. Languages

Users who speak different languages make voice recognition more complex. However, there are now tools for integrating multiple languages into voice tech.

3. Context

Q: Who won today’s game?

A: I’m sorry, I can’t answer that.

No one could be blamed for failing to answer such a vague question. However, if voice technology can be trained to remember context, things change: you may have asked the voice assistant about a particular game earlier in the day, and that would let the app answer your question correctly. Or, if the voice assistant could see your media activity, it might know which game you were watching.

Answering any question is difficult without context. Building voice assistants in a way that they can gather meaningful context from your activity is possible, albeit difficult.
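To make the idea concrete, here is a deliberately tiny Python sketch of context memory. The `ConversationContext` class, its vague-phrase list, and the sample topic are all invented for illustration; production assistants track context with far richer models.

```python
# Hypothetical phrases that are too vague to answer without earlier context.
VAGUE_PHRASES = ("today's game", "the game")


class ConversationContext:
    """Remembers the most recent topic so vague follow-up questions can be resolved."""

    def __init__(self):
        self.last_topic = None

    def remember(self, topic):
        """Store the topic of an earlier request."""
        self.last_topic = topic

    def resolve(self, question):
        """Replace a vague phrase with the remembered topic.

        Returns None when the question is vague and no topic is remembered.
        """
        lowered = question.lower().replace("’", "'")
        for phrase in VAGUE_PHRASES:
            if phrase in lowered:
                if self.last_topic is None:
                    return None
                return lowered.replace(phrase, self.last_topic)
        return lowered


context = ConversationContext()
print(context.resolve("Who won today's game?"))  # None: nothing to go on yet
context.remember("the Lakers game")  # made-up earlier request
print(context.resolve("Who won today's game?"))  # who won the Lakers game?
```

The first call mirrors the "I’m sorry, I can’t answer that" reply above; the second shows how one remembered topic turns the same question into something answerable.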

4. Optimization

Optimizing your mobile app for voice control means significantly rethinking the way we have searched via text until now. When typing, people tend to enter queries like ‘restaurants near me’. When speaking, they’re more likely to say, “Can you suggest a good restaurant?”

These subtle differences need to be carefully thought over to design efficient voice experiences on mobile apps.

Mobile app developers and app marketers can use voice search to their advantage by making just a few changes to their marketing strategy. All this time, keywords have been at the very center of app marketing and SEO. However, a user performing a voice search is more likely to ask, “Where is the nearest gas station?” than “gas stations Dallas Texas.” Most long-tail voice searches are likely to begin with ‘what,’ ‘where,’ ‘when,’ ‘why’ and ‘how.’ Marketers need to align their content with these questions for their content or app to show up in voice search results.
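Here is a small Python sketch of how an app might treat the two query styles. The question words come from the paragraph above; the filler-word list and the `analyze_query` function are assumptions made for this example.

```python
import re

QUESTION_WORDS = {"what", "where", "when", "why", "how"}
# Hypothetical filler words that carry no search meaning.
FILLER_WORDS = {"is", "are", "the", "a", "an", "me", "some", "to", "of"}


def analyze_query(transcript):
    """Return (is_question, keywords) for a typed or spoken query."""
    words = re.findall(r"[a-z']+", transcript.lower())
    is_question = bool(words) and words[0] in QUESTION_WORDS
    keywords = [
        word
        for word in words
        if word not in QUESTION_WORDS and word not in FILLER_WORDS
    ]
    return is_question, keywords


print(analyze_query("Where is the nearest gas station?"))
# prints (True, ['nearest', 'gas', 'station'])
print(analyze_query("gas stations Dallas Texas"))
# prints (False, ['gas', 'stations', 'dallas', 'texas'])
```

Both queries boil down to similar keywords, but the first is flagged as a question, so the app can respond with a direct answer rather than a plain list of results.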

Opportunities in Voice Tech for Mobile Apps

Google has become incredibly intelligent and intuitive over the years, and search results are insanely precise and accurate today. Clickbait and low-quality, keyword-stuffed content have absolutely no place in the SERPs. Short and quick queries like unit conversions, international time, movie actors’ names, and other precise questions get answered right at the auto-complete stage in the address bar. In an age of such precision, voice search is set to make results even more conversational.

So with consumers increasingly turning to intelligent assistants like Siri, Cortana and Alexa, mobile app developers too must integrate voice search into their apps to provide quicker, sharper and more conversational results. In fact, OK Google is set to revolutionize in-app search with voice. It is imperative that all mobile app development taking place now be voice-search-compatible. If your app is a shopping app, the user should be able to bring the phone or watch to their mouth and say, “Show me some good T-Shirts” and your app should be able to display in-app results immediately.

Conclusion

Voice-based interactions with mobile apps are faster, easier, and more convenient. Clearly, mobile app developers need to align with public sentiment and build mobile app experiences that are conversational and interactive. If your app is a good listener, your users will spend more time talking to it, which helps you market your services better and build stronger customer relationships. While there are challenges, natural language processing and artificial intelligence have come a long way in addressing a majority of them. With a bit of learning and perseverance, you can build a mobile app whose experience keeps users coming back to you.